Effective Quiz Design

When considering a quiz for an assessment task, the most important question to ask is whether a quiz is the correct choice for that particular task. This will depend on the Learning Outcomes of the unit and whether they can be effectively assessed using multiple choice questions. It also depends on the other assessments in the unit and/or major. Before adding a quiz to a unit or changing an existing assessment to a quiz, consider these elements of the unit.

Choosing to use a quiz

As formative assessment

Used formatively, quizzes can:

- Engage students in actively reviewing their own learning progress (Velan et al., 2008).

- Trigger collaborative learning activities

- Provide opportunities for collaboration

- Create activities that disrupt the traditional agency of assessment (Fellenz, 2010)

- Allow for instant or rapid feedback on student performance

As summative assessment

Used summatively, quizzes should:

- Test the lesson or unit objectives

- Be scaffolded

- Cover the scope of the entire unit or part of the unit

- Be part of a variety of assessment types

Benefits of quizzes

- Items can measure learning outcomes from simple to complex

- Incorrect answers chosen by students provide diagnostic information

- Scores can be more reliable and objective than subjectively scored items

- Marking is less time consuming

- Items can cover a lot of material very efficiently

- Items can be written so that students must discriminate among options that vary in degree of correctness (Zimmaro)

Limitations of quizzes

- Constructing good items is time consuming

- Items are often ineffective for measuring some types of problem solving and the ability to organise and express ideas

- Scores can be influenced by reading ability

- Items often focus on testing factual information and fail to test higher levels of cognitive thinking

- There is sometimes more than one defensible “correct” answer (Zimmaro)

Purpose of quizzes

Testing understanding

Questions require the learner to recall or comprehend learned information without applying a concept.

Example

Which of the following ordered elements are required to cite an article from a printed newspaper in conformity with AGLC4?

Select one:

- Author, name of article, name of newspaper, publisher, place of publication, date of access, pinpoint.

- Author, name of article, name of newspaper, place of publication, date of publication, pinpoint.

- Name of newspaper, author, name of article, place of publication, date of publication, pinpoint.

- ‘Editorial’, name of article, name of newspaper, place of publication, date of access, pinpoint.

Testing Higher Order Thinking

Questions require the learner to use prior knowledge to:

- apply new information by incorporating relevant rules, methods, concepts, principles and theories

- understand components of a concept

- identify relationships

- analyse, synthesise and/or evaluate (Zaidi)

Types of questions:

- Premise - Consequence

- Case study

- Incomplete Scenario

- Problem/Solution Evaluation

Example

What kind of fallacy is this:

"Most people think iPhones are the best phones, so they must be the best."

Select one:

- Argument from Ignorance

- Appeal to Popularity

- Appeal to Tradition

- Appeal to Pity

Writing effective questions

Steps in Quiz Design

Effective Quiz Analysis

Once a quiz has been taken by students, effective quiz analysis can provide an understanding of problematic areas in the quiz that can then be adapted for future deliveries. The analysis methods outlined below may require a certain number of responses to provide sufficient data.

Item difficulty

Item difficulty is the percentage of students who answered the question correctly. It is also known as the P Value.

This results in a range from 0% to 100% (0.00 to 1.00).

To calculate the item difficulty, the number of students who got an item correct is divided by the total number of students who answered it.

Values above 0.90 can signify easy items, which should be reviewed for obvious distractors or for a concept which does not need to be tested. Values below 0.20 can signify difficult items, which should be reviewed for confusing language, highlighted as an area for reinstruction, or removed from the test.
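The calculation and the review thresholds above can be sketched in a few lines of Python. This is only an illustration: the function names `item_difficulty` and `review_flag` and the sample data are hypothetical, and the cut-offs are simply the 0.90 and 0.20 values quoted above.

```python
def item_difficulty(responses):
    """Return the proportion of students who answered the item correctly.

    `responses` holds one boolean per student who answered the item
    (True = correct).
    """
    if not responses:
        raise ValueError("at least one response is required")
    return sum(responses) / len(responses)


def review_flag(p_value, easy=0.90, hard=0.20):
    """Classify an item using the thresholds quoted in the text."""
    if p_value > easy:
        return "review: possibly too easy"
    if p_value < hard:
        return "review: possibly too difficult"
    return "ok"


# Hypothetical data: 8 of 10 students answered the item correctly.
answers = [True] * 8 + [False] * 2
p = item_difficulty(answers)
print(p, review_flag(p))
```

Students who skipped the item are left out of `responses`, which matches dividing by the number of students who answered it.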

The ideal value for item difficulty is slightly higher than midway between chance (1.00 divided by the number of choices) and a perfect score (1.00) for the item.
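As a worked example of the midpoint described above, the short sketch below computes the value halfway between chance and a perfect score for items with different numbers of choices. The function name `midpoint_difficulty` is hypothetical, and the guidance says the ideal value sits slightly above this midpoint, so the result is best read as a lower bound for the target rather than the target itself.

```python
def midpoint_difficulty(num_choices):
    """Midpoint between the chance score and a perfect score (1.00)."""
    if num_choices < 2:
        raise ValueError("an item needs at least two choices")
    chance = 1.0 / num_choices  # probability of guessing correctly
    return (chance + 1.0) / 2


for k in (2, 3, 4, 5):
    print(k, "choices:", round(midpoint_difficulty(k), 3))
```

For a four-option item, chance is 0.25, so the midpoint is 0.625 and the ideal difficulty would be a little above that.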

Item discrimination

Item discrimination identifies the relationship between how well students did on the item and their total test score. It is also referred to as the Point-Biserial correlation (PBS).

It results in a range from –1.00 to 1.00.

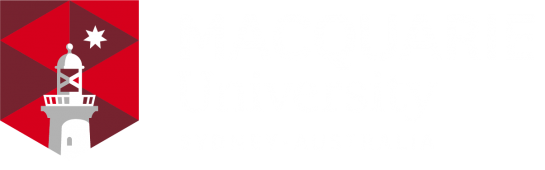

Calculation:

Impact

A higher value indicates a more discriminating item. Ideally the value will be as close to 1.00 as possible, although a value over 0.20 is acceptable.

Values near or below zero indicate that students who did poorly overall did as well as, or better than, students who did well overall on that item. This indicates that the item may be confusing for the better scoring students in some way and should be revised or removed from the test.
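For readers who want to compute the discrimination value themselves, the following is a minimal sketch of one standard form of the point-biserial correlation. It assumes each student's item result is coded 1 (correct) or 0 (incorrect) and that the total score includes the item itself; some tools exclude the item from the total, and the source does not say which convention it uses. The function name `point_biserial` and the sample data are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev


def point_biserial(item_correct, total_scores):
    """Point-biserial correlation between one item and the total score.

    item_correct -- 1 or 0 per student (1 = answered the item correctly)
    total_scores -- each student's total test score, in the same order
    """
    if len(item_correct) != len(total_scores):
        raise ValueError("both lists must describe the same students")
    right = [t for c, t in zip(item_correct, total_scores) if c]
    wrong = [t for c, t in zip(item_correct, total_scores) if not c]
    if not right or not wrong:
        raise ValueError("need at least one correct and one incorrect response")
    spread = pstdev(total_scores)  # population standard deviation
    if spread == 0:
        raise ValueError("total scores must not all be identical")
    p = len(right) / len(total_scores)  # item difficulty
    return (mean(right) - mean(wrong)) / spread * sqrt(p * (1 - p))


# Hypothetical data: the three strongest students answered correctly.
item = [1, 1, 1, 0, 0]
totals = [9, 8, 7, 4, 2]
print(round(point_biserial(item, totals), 2))
```

Reversing the item results, so that only the two weakest students answer correctly, gives the same magnitude with a negative sign, which is the pattern the text describes as a reason to revise or remove the item.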

Reliability coefficient

The reliability coefficient is a measure of the amount of measurement error associated with a test. It indicates how well the items are correlated with one another.

It results in a range from 0.0 to 1.0.

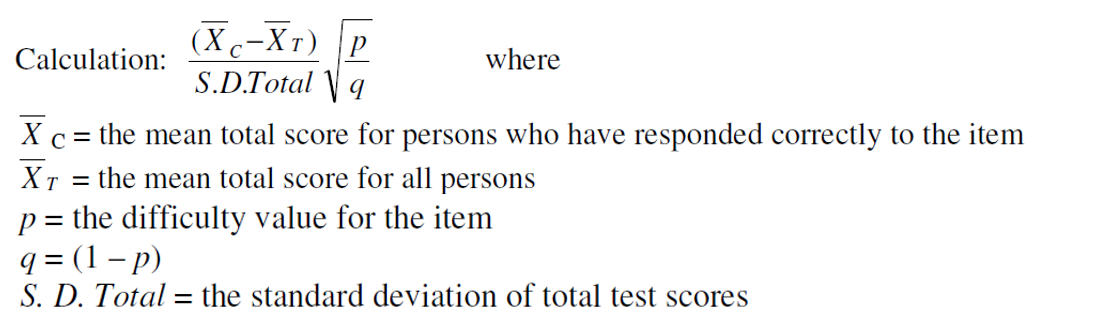

Calculation:

A higher value indicates a more reliable overall test score and indicates that the items are all measuring the same thing, or general construct. The ideal value is 1.0 and any value over 0.60 is acceptable.

When the reliability coefficient indicates that a test is unreliable, it is possible to improve reliability by increasing the number of questions or using items that have high discrimination values.
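The text does not name the specific coefficient it uses. As an illustration only, the sketch below assumes the Kuder-Richardson Formula 20 (KR-20), a common reliability coefficient for items scored right or wrong; your quiz tool may report a different statistic, such as Cronbach's alpha. The function name `kr20` and the sample data are hypothetical, and the population variance of total scores is used here, which is one of two conventions in circulation.

```python
from statistics import pvariance


def kr20(score_matrix):
    """KR-20 reliability for a test of right/wrong items.

    score_matrix -- one row per student, one 0/1 entry per item
    """
    n_students = len(score_matrix)
    n_items = len(score_matrix[0])
    if n_items < 2:
        raise ValueError("KR-20 needs at least two items")
    totals = [sum(row) for row in score_matrix]
    total_var = pvariance(totals)  # population variance of total scores
    if total_var == 0:
        raise ValueError("total scores must not all be identical")
    pq_sum = 0.0
    for j in range(n_items):
        p = sum(row[j] for row in score_matrix) / n_students  # item difficulty
        pq_sum += p * (1 - p)
    return n_items / (n_items - 1) * (1 - pq_sum / total_var)


# Hypothetical data: 5 students, 4 items, in a very consistent pattern.
scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
print(round(kr20(scores), 2))
```

With real data, KR-20 can fall below zero when items are poorly correlated, so a negative result should be read as a sign of an unreliable test rather than a calculation error.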

Distractor evaluation

Distractor evaluation examines the frequency (count), or number of students, that selected each incorrect alternative or distractor. The number of people choosing a distractor can be lower or higher than expected because of partial knowledge, a poorly constructed item or the distractor being outside of the area being tested.

To complete distractor evaluation, create a frequency table which displays the number and/or percent of students that selected a given distractor.

Each distractor should be selected by at least a few students and ideally all distractors should be equally popular.

Distractors which are selected by only a few or no students should be removed or replaced. Similarly, distractors which are selected by as many or more students than the correct answer should be reviewed.
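A frequency table of this kind can be built with a short script. The sketch below is one possible approach, not a prescribed method: the function name `distractor_table`, the `min_count` cut-off for "only a few" students, and the sample responses are all assumptions made for illustration.

```python
from collections import Counter


def distractor_table(choices, correct, options, min_count=1):
    """Build a frequency table of selected options for one item.

    choices   -- the option each student selected, e.g. ["A", "B", ...]
    correct   -- the keyed (correct) option
    options   -- every option offered, so unselected ones appear with 0
    min_count -- hypothetical cut-off below which a distractor is flagged
    """
    if not choices:
        raise ValueError("at least one response is required")
    counts = Counter(choices)
    key_count = counts[correct]
    rows = []
    for option in options:
        count = counts[option]  # Counter returns 0 for unselected options
        if option == correct:
            note = "correct answer"
        elif count < min_count:
            note = "rarely chosen: remove or replace"
        elif count >= key_count:
            note = "as popular as the correct answer: review"
        else:
            note = "ok"
        rows.append((option, count, 100 * count / len(choices), note))
    return rows


# Hypothetical responses from 10 students to a four-option item keyed "B".
responses = ["B"] * 6 + ["A"] * 3 + ["C"]
for option, count, percent, note in distractor_table(responses, "B", "ABCD"):
    print(f"{option}  {count:>2}  {percent:5.1f}%  {note}")
```

Raising `min_count` makes the check stricter; a sensible value depends on class size, so it is left as a parameter rather than fixed.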